Google's very much alive, approaches to monetising AI, and Apple's tough spot

19 May 2024 | Issue #21 - Mentions $GOOG, $AMZN, $BABA, $BIDU, $TCEHY, $MSFT, $AAPL

Welcome to the twenty-first edition of Tech takes from the cheap seats. This is my public journal, where I aim to write weekly about tech and consumer news and trends I find interesting.

Let’s dig in.

Reports of Google’s death are greatly exaggerated

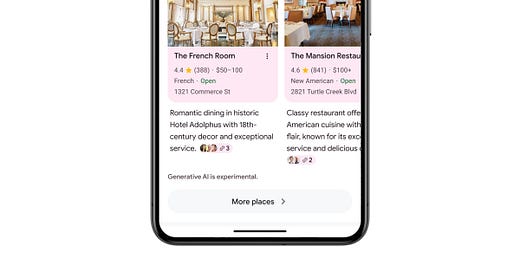

Google held its annual developer conference, I/O, this week, where it unveiled new AI features set to launch. The most impactful feature in the near term is "AI Overviews," previously known as "Search Generative Experience." It will be available to everyone in the US this week.

There has been (and perhaps still is) a narrative that the company will struggle in the age of AI because its near-monopoly in search engine advertising is exposed to disruption, and that GenAI responses won't be as lucrative as the 'ten blue links'. I tried to keep an open mind about what the future of search would look like, and reasoned that if AI-embedded search leads to incremental queries, it would be additive to the company's bottom line.

Now that Google has announced a broad roll-out to US consumers, this increasingly looks like the likely outcome (assuming Google is only doing so because it isn't dilutive to the core business). Indeed, on the latest earnings call, CEO Sundar Pichai confirmed as much.

“AI innovations in Search are the third and perhaps the most important point I want to make. We have been through technology shifts before, to the web, to mobile, and even to voice technology. Each shift expanded what people can do with Search and led to new growth. We are seeing a similar shift happening now with generative AI. For nearly a year, we've been experimenting with SGE in search labs across a wide range of queries. And now we are starting to bring AI overviews to the main Search results page. We are being measured in how we do this, focusing on areas where gen AI can improve the search experience while also prioritizing traffic to websites and merchants. We have already served billions of queries with our generative AI features. It's enabling people to access new information, to ask questions in new ways and to ask more complex questions. Most notably, based on our testing, we are encouraged that we are seeing an increase in search usage among people who use the new AI overviews as well as increased user satisfaction with the results.”

Shares have now re-rated from the year's lows to 21x NTM P/E, but are still below market multiples. There are still question marks over what the future of search looks like, but what's clear is that the management team is taking the necessary steps to ensure it isn't left behind. It will remain an ever-evolving space.

Executive turnover in AI

This week brought news of a couple of high-profile executives departing key roles. AWS CEO Adam Selipsky announced he was moving on to his next challenge after sales at the unit accelerated to a $100bn annual run-rate, while OpenAI co-founder and chief scientist Ilya Sutskever posted on X that he was leaving the company.

I have previously written about Amazon's approach to GenAI and its early missteps. Internet speculation suggested that Adam was pushed out for 'missing' AI. However, the timeline of these events seems to coincide with Andy Jassy's tenure as AWS CEO. For instance, OpenAI first approached AWS in 2018, and in 2021 AWS turned down the opportunity to invest in Anthropic (although Andy was in the photo-op when they finally partnered with Anthropic). So it's unclear how much of this Adam was responsible for. Adam's main AI strategy appeared to be giving customers a choice of models, arguing against integrating with just one. This strategy has now been adopted by all three hyperscalers.

According to The Information, Adam's replacement, Matt Garman, was also cautious in the early stages of the AI boom.

As the AI boom was starting, some of Garman’s colleagues sensed he didn’t fully understand why so many young startups were raising tens of millions of dollars from investors to rent the Nvidia chips from AWS and other cloud providers. On a few startup deals, Garman questioned the high valuations venture investors were giving to the companies as well as their business models and whether they would be able to pay to rent AI-chip servers from AWS over a long period, according to a person who spoke to him at the time.

That may be one reason why Garman was among the senior AWS leaders, in addition to Selipsky, who cautioned against signing sweetheart cloud deals with AI startups several years ago, when the promise of conversational AI was less apparent, this person said. In 2021, AWS turned down the opportunity to invest in Anthropic, which wanted to forge closer ties with the cloud provider in the same way Microsoft had gotten close to OpenAI. AWS later committed $4 billion to Anthropic, but it missed out on the opportunity to ink a more exclusive partnership. Anthropic has committed to spending billions of dollars on Google Cloud, and Google can also sell the startup’s AI models to its customers.

Forging an earlier, closer relationship with a company like Anthropic could have put AWS in a better position to launch generative AI products more quickly, said two people who have worked on AI strategy at the company. It also might have helped AWS avoid being caught flatfooted by OpenAI’s swift rise.

On Ilya, I guess it isn't too surprising given his involvement in last November's coup. He is regarded as one of the leading researchers in deep learning and AI, so his departure is a big loss for OpenAI.

Different approaches to monetising AI

It was interesting to read in the FT about how China’s AI companies are approaching monetisation.

China’s artificial intelligence groups are selling “AI-in-a-box” products for companies to run on their own premises, in a threat to the AI cloud computing services offered by the country’s big tech groups such as Alibaba, Baidu and Tencent.

Huawei has signed up more than a dozen AI start-ups to bundle and market their large language models with its AI processors and other hardware. Its partners include groups such as Beijing-based Zhipu AI and language specialist iFlytek.

Chinese groups are deploying the boxes to bring the advances of generative AI to on-premise, or private cloud, set-ups, which account for about half of the cloud market in the country.

Huawei estimates the Chinese market for “all-in-one machines”, as they are known locally, will hit Rmb16.8bn ($2.3bn) this year. Analysts at Minsheng Securities forecast the government market for AI boxes could reach Rmb450bn by 2027.

Liu Qingfeng, iFlytek founder, said at a product launch event for its AI box last year that the company’s “all-in-one machine has top-notch performance, is safe and controllable and is ready to use right out of the box”.

The moves diverge from how AI is being commercialised in the west and capitalises on concerns at Chinese companies about protecting their data.

The trend could crimp the ambitions of tech giants that have invested heavily in building AI infrastructure and large language models that can be sold as a service to customers over the cloud. Baidu’s Robin Li has laid out a vision of hundreds of AI apps running on top of the company’s foundational models. The proliferation of AI-in-a-box could also solidify the split of the Chinese cloud market.

Chinese companies are prioritising privacy and data protection, even though running private clouds is less efficient and more expensive because of lower hardware utilisation.

Other FT articles on AI monetisation have compared the costs of various models, highlighting how much cheaper smaller language models are.

Despite costing far less, the smaller 8bn-parameter Llama 3 model performs comparably to GPT-4 on some benchmarks, while Microsoft's 7bn-parameter Phi-3 outperformed GPT-3.5. As performance per unit of cost improves over time and these models are deployed across applications, it becomes easier to see a return on these tech companies' investments.

On a related note, Bill Gurley said something interesting on the latest BG2 podcast.

“I found myself feeling like both OpenAI and Google were tilting more towards the consumer. There's been a lot of talk, is this about the API and enterprise? Is this about the consumer? Where are they going to make money? Where are they going to focus?”

He said this after they discussed the latest voice models launched by both OpenAI and Google. They then go on to discuss the consumer AI vs enterprise AI landscape, which is worth a listen.

The battle seems to be shifting towards the consumer. This is logical, as history shows that 'killer apps' scale fastest through consumers. Once scale is achieved, more usage generates more feedback for reinforcement learning from human feedback (RLHF), which improves the models and forms a virtuous cycle. This is likely why Microsoft hired Mustafa Suleyman to head its consumer-focused AI division. With Anthropic's mobile app launch proving relatively underwhelming, the company is likely hoping that Instagram co-founder Mike Krieger, its new chief product officer, will improve its fortunes.

Apple’s AI strategy

We will know for sure in a few weeks at WWDC, but Bloomberg's Mark Gurman had a good newsletter outlining Apple's strategy in AI.

Of course, Apple has some advantages of its own — money, talent and a powerful platform — and should at least be able to make it a closer game. But it will require a meaningful change to its strategy, as well as some help from its AI competitors.

Though Apple might disagree, the company’s AI-related features like Siri have been stymied by an outsized reliance on processing information on the iPhone itself and a lack of data collection. Apple does both these things deliberately, part of its efforts to protect privacy and security. But they don’t always make for the best experience.

The good news is, Apple has an opportunity to start fresh. The company is unveiling new generative AI features at its Worldwide Developers Conference on June 10 and is poised to make some bold changes.

Though the company will still rely on the on-device approach — with its own large language models powering AI features on phones and computers — it’s also planning to deliver services via the cloud. As I’ve reported, Apple is putting high-end Mac chips into its data centers to handle these online features.

TLDR:

Apple is in a challenging position compared with other major tech companies that have a head start. Despite the advantage of distribution across more than 2 billion devices, Apple has the lowest gross margins among its peers and no apparent way to profit from AI. If Apple were to embark on a major capex cycle, its margins would likely suffer. Nor does it help that Apple has underinvested in data centres, especially as Nvidia allocates GPUs based on relationship strength. The fact that Apple hasn't undergone a major capex cycle may suggest it is looking to outsource LLMs to third parties, given that cloud-powered LLMs would require far more data-centre capacity for inference. We will see.

Some analysts predict that this year's iPhone updates will trigger a consumer upgrade cycle. Historically, however, software updates haven't typically led to phone upgrades, since they can be deployed across the existing user base. Coupled with Apple's lagging AI capabilities, it's uncertain whether consumers will be eager to buy the next iPhone. Limiting AI capabilities to the most expensive models, one way to push users to upgrade, hasn't been successful for Google's Pixel or Samsung's Galaxy either.

That's all for this week. If you've made it this far, thanks for reading. If you've enjoyed this newsletter, consider subscribing or sharing it with a friend.

I welcome any thoughts or feedback; feel free to shoot me an email at portseacapital@gmail.com. None of this is investment advice; do your own due diligence.

Disclosures: I am long Google, Amazon, Tencent, Microsoft and short Apple.

Tickers: $GOOG, $AMZN, $BABA, $BIDU, $TCEHY, $MSFT, $AAPL